The internet is buzzing with bot traffic. In fact, nearly half of all online traffic in 2023 was estimated to be invalid, mainly consisting of bots crawling through websites.

Not all bots are bad, though. Some, like search engine crawlers, are helpful. But others, like click bots or spam bots, can distort your analytics and make it hard to evaluate your traffic.

That’s why Google Analytics 4 (GA4) plays an integral role in identifying bot traffic and filtering out malicious bots. Because it keeps records of all your website visitors, it can serve as an initial indicator of bot activity.

In this article, we will explore the secrets of how you can use GA4 to identify bot traffic and take some actions to minimize its presence on your website.

What exactly is bot traffic?

Bot traffic refers to automated website visits carried out by computer programs rather than human users. These bots serve various purposes, ranging from indexing content for search engines to monitoring the health of your website… and also, unfortunately, stealing your data, spreading spam, or committing click fraud.

Good bots, like search engine bots, play a helpful role in indexing and ranking your site. They contribute to the visibility of your content in search results, which makes them essential to the overall health of your website.

However, bad bots, such as click bots or spam bots, are the ones you need to watch out for. They can mislead your analytics by artificially inflating pageviews and sessions or triggering false interactions.

What is bot traffic in GA4?

When it comes to GA4, bot traffic refers to the above-described automated visits. As such, this traffic does not represent a genuine interest in your product or service, and if it infiltrates your analytics, it will impact your data accuracy.

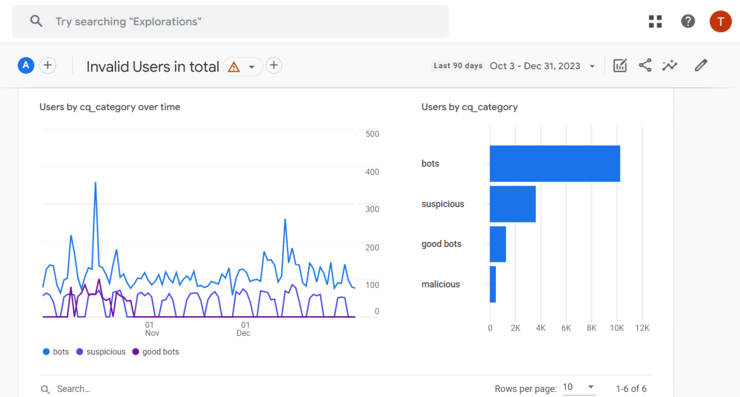

It’s important to mention here that GA4 automatically excludes web traffic from known good bots and spiders. This applies to bots with well-established identities and consistent behavior.

Search engine bots and known SEO tools like Ahrefs and Semrush fall under this category, and their traffic won’t show up in your GA4 reports. However, some new or less popular bots, whether good or bad, will still appear in your Google Analytics reports. It takes time for Google to detect repeated visits across websites that come from the same sources or follow specific patterns.

And while GA4 automatically clears your reports of the helpful bots, web traffic from malicious bots still makes its way into your analytics data.

But how can you recognize it and avoid its impact on your data and further activities? Let’s explore how you can do that in GA4.

How can you identify bot traffic in GA4?

Identifying bot traffic in Google Analytics involves using a combination of built-in features and additional settings. While GA4 includes some automatic mechanisms for filtering out known bots, it’s important to implement additional measures to enhance bot detection. Let’s explore some.

Customize your reports

The default reports in GA4 don’t contain all the metrics that can distinguish between bot and human traffic. So, your number one task is to customize your report data in a way that will help you recognize the signs of bot traffic.

Our recommendations? The Traffic acquisition and User acquisition reports are the ones that hold most of these key metrics you’d like to have included, so let’s start from there.

Here’s how to access these reports in your Google Analytics 4 account:

- Sign in to your Google Analytics account.

- Select “Reports” from the left menu.

- Expand the “Acquisition” section in the Life cycle collection.

- Choose the desired report:

  - Traffic Acquisition report

  - User Acquisition report

Once there, you can start customizing your report by clicking the “Customize Report” button (pencil icon) in the upper right corner. Select “Metrics” under Report data, and you can start with adding metrics.

Here’s our recommendation of metrics to have included under each of these two reports:

Traffic acquisition report:

- Engagement rate

- Average engagement time

- Sessions

- Engaged sessions

- Views per session

User acquisition report:

- Total users

- Users (Represents the number of active users)

- New users

- Event count

- Engaged sessions per user
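
If you’d rather pull these metrics programmatically, the lists above map roughly onto a GA4 Data API `runReport` request. The sketch below only builds the request body as a plain dictionary and doesn’t call the API. The helper `build_report_request` is hypothetical, and the API metric and dimension names are our best mapping of the UI labels, so treat them as assumptions and check them against the Data API schema before relying on them.

```python
import json

# Assumed API names for the UI metrics listed above; verify against the
# GA4 Data API schema. "Engaged sessions per user" is left out because we
# are not certain of its API name.
TRAFFIC_METRICS = [
    "sessions",
    "engagedSessions",
    "engagementRate",
    "userEngagementDuration",  # total engagement time, not the average
    "screenPageViewsPerSession",
]
USER_METRICS = ["totalUsers", "activeUsers", "newUsers", "eventCount"]


def build_report_request(dimension, metrics, start="28daysAgo", end="today"):
    """Build a runReport-style request body (hypothetical helper)."""
    return {
        "dateRanges": [{"startDate": start, "endDate": end}],
        "dimensions": [{"name": dimension}],
        "metrics": [{"name": name} for name in metrics],
    }


# One request per report: session-scoped and first-user-scoped source/medium.
traffic_request = build_report_request("sessionSourceMedium", TRAFFIC_METRICS)
user_request = build_report_request("firstUserSourceMedium", USER_METRICS)

print(json.dumps(traffic_request, indent=2))
```

Keeping the request as plain data makes it easy to review which metrics you’re asking for before sending it with whichever client you use.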

Recognizing suspicious patterns

Here’s how to identify suspicious patterns in your data:

Behavioral data:

- Short engagement sessions: Bots often spend only seconds on a page, unlike humans. Check the engagement rate (in GA4, bounce rate is its inverse: the share of sessions that were not engaged) and average engagement time for anomalies.

- Unrealistic page views: Hundreds of pages viewed in a single session could indicate scraping bots.

- Unusual interactions: Unnatural scroll patterns, rapid form fills, or visiting multiple pages with no mouse movement are examples of bot-specific interactions.

- A sudden flow of spam comments: A spam bot’s main purpose is to land on your website and spread spammy comments. These comments often promote unrelated products, use flattering language, or include irrelevant links. You can recognize them by their unnatural, generic wording.

- Declined card transactions: Fraudsters usually use stolen or non-existent credit card information. Many attempts to complete a transaction mean they’re trying different variations or the card they’ve stolen has been blocked.
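
The first two behavioral signs above can be checked on exported report rows. Here’s a minimal sketch, assuming you’ve exported one row per traffic source with the metrics from the customized Traffic acquisition report. The `SourceRow` fields, the sample rows, and every threshold are illustrative assumptions, not GA4 defaults, so tune them to your own baseline.

```python
from dataclasses import dataclass


@dataclass
class SourceRow:
    source: str
    sessions: int
    engaged_sessions: int
    avg_engagement_seconds: float
    views_per_session: float


def engagement_rate(row):
    """Engaged sessions divided by sessions (0.0 when there are no sessions)."""
    return row.engaged_sessions / row.sessions if row.sessions else 0.0


def suspicious_reasons(row, min_rate=0.10, min_seconds=3.0, max_views=50.0):
    """Return the bot-like signals a row triggers (thresholds are assumptions)."""
    reasons = []
    if row.sessions and engagement_rate(row) < min_rate:
        reasons.append("low engagement rate")
    if row.avg_engagement_seconds < min_seconds:
        reasons.append("very short engagement time")
    if row.views_per_session > max_views:
        reasons.append("unrealistic views per session")
    return reasons


# Made-up sample rows for illustration only.
rows = [
    SourceRow("google / organic", 1200, 780, 48.0, 2.4),
    SourceRow("unknown-site.example / referral", 900, 9, 0.8, 1.0),
    SourceRow("(direct) / (none)", 40, 30, 35.0, 120.0),
]

for row in rows:
    reasons = suspicious_reasons(row)
    if reasons:
        print(f"{row.source}: {', '.join(reasons)}")
```

A flagged row is a prompt to investigate, not proof of bot activity, since some genuine campaigns also produce short, single-page visits.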

Demographic data:

- Traffic spikes from a single IP address: Malicious scripts can generate high traffic volumes in a short time span. A sudden, massive increase in web traffic originating from a single IP address is a clear sign of bot traffic. GA4 doesn’t show IP addresses in its reports, so check your server or CDN logs for this signal.

- Unlikely origins: Traffic spikes from countries you don’t target or visits classified as ‘Location not set’ might be bots. Unusual sources, such as unfamiliar websites, data centers, or crawlers with unusual user agents, are another sign of suspicious traffic.

- Unusual devices or traffic sources: A sudden influx of uncommon devices or operating systems could indicate bot traffic. Similarly, a spike in new users acquired from a single traffic source is a sign of suspicious activity.
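
Spikes like the ones described above are easier to spot when you compare each day against a recent baseline. The sketch below assumes you have daily session counts per country (or per source) and flags days that exceed the trailing 7-day median by a large factor. The function name, the sample numbers, and the thresholds are all illustrative assumptions.

```python
from statistics import median


def find_spikes(daily_sessions, window=7, factor=5.0, min_sessions=50):
    """Return (day_index, value, baseline) for days far above the trailing median."""
    spikes = []
    for i in range(window, len(daily_sessions)):
        baseline = median(daily_sessions[i - window:i])
        value = daily_sessions[i]
        # max(baseline, 1) avoids flagging every day when the baseline is zero.
        if value >= min_sessions and value > factor * max(baseline, 1):
            spikes.append((i, value, baseline))
    return spikes


# Made-up daily session counts for illustration only.
sessions_by_country = {
    "United States": [120, 131, 118, 125, 140, 122, 129, 135, 127],
    "Unexpected country": [2, 3, 1, 4, 2, 3, 2, 410, 380],
}

for country, series in sessions_by_country.items():
    for day, value, baseline in find_spikes(series):
        print(f"{country}: day {day} had {value} sessions (baseline {baseline})")
```

We use the median rather than the mean so that one spike day doesn’t inflate the baseline and hide the next one.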

How to filter bot traffic in GA4?

Now that you know how to recognize early signs of bot traffic in Google Analytics 4, let’s see how you can exclude it from appearing there in the first place.

While GA4 doesn’t provide the same level of granular filtering as Universal Analytics, there are some features that can be used for this purpose.

Use custom filters

With custom filters in Google Analytics 4, you can modify or segment the data in your reports based on specific conditions. By applying your preferred criteria, you can tailor the data you see in your reports. This way, you’ll filter out suspicious or known bot traffic sources from messing up your data.

To apply a filter in GA4, select “Reports” from the left menu and open a report. You’ll find the “Add filter” button at the top of the report.

You can create custom filters based on various conditions, such as events, user properties, or other dimensions.

Choose the dimension you want to filter and set the conditions for inclusion or exclusion.

There are two types of filters: “Include filter” and “Exclude filter.” As the names suggest, the first keeps only the traffic that matches your conditions, and the second removes it. When filtering out bot traffic, you’ll naturally want to use the “Exclude filter.”

You can set conditions on various dimensions, such as country or user properties, and choose how values are compared, for example by exact match, by non-match, or with regular expressions.

For example, you might create a filter to exclude users who stay less than 10 seconds on your website.
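
If you plan to use a regular expression in a filter, it helps to test the pattern against sample values first. Here’s a small sketch using Python’s `re` module. The domains are made up, and we’re assuming your pattern uses only basic syntax that behaves the same in Python and in GA4’s regex matching, so verify the final pattern in GA4 itself.

```python
import re

# Hypothetical spam domains; replace them with sources you actually see.
SPAM_SOURCE_PATTERN = re.compile(
    r"(^|\.)(spam-site|free-traffic)\.example$", re.IGNORECASE
)


def is_excluded(source):
    """Return True when the source matches the exclusion pattern."""
    return SPAM_SOURCE_PATTERN.search(source) is not None


samples = [
    "google",
    "spam-site.example",
    "news.free-traffic.example",
    "notspam-site.example",
    "partner.example",
]

for source in samples:
    print(f"{source}: {'exclude' if is_excluded(source) else 'keep'}")
```

The `(^|\.)` prefix makes the pattern match the domain and its subdomains without also catching lookalike names such as `notspam-site.example`.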

Create segments

The purpose of the “Create segments” feature in GA4 is to help you define and save specific subsets of your data. Segments can be based on various criteria, and they let you analyze and understand the behavior of specific groups of users or events within your overall data set.

To create segments in GA4, navigate to the “Explore” tab in your GA4 property. Once there, look for the “Segment builder” in the top left corner.

Under segment conditions, you can:

- Exclude known bots:

  - Add a condition like “User Agent does not contain” and list common bot user agents (e.g., “Googlebot,” “Bingbot,” “SemrushBot”).

- Filter by IP addresses:

  - If you have specific IP addresses associated with bots, create a condition like “IP Address does not equal” and list those IPs.

- Examine events and engagement:

  - Add conditions based on suspicious event patterns or low engagement metrics:

    - “Event count is less than X”

    - “Session duration is less than Y seconds”

    - “Pageviews per session is equal to 1”
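
To see how these conditions combine, here’s a rough sketch that applies the same logic to session rows outside GA4. It assumes each row is a dictionary with `user_agent`, `event_count`, `session_seconds`, and `pageviews` keys, for example from server logs or your own export. The field names, thresholds, and the “at least two weak signals” rule are our assumptions, not GA4 behavior.

```python
BOT_AGENT_MARKERS = ("googlebot", "bingbot", "semrushbot")


def looks_like_bot(session, min_events=2, min_seconds=2.0):
    """Flag a session on a known bot user agent or on two or more weak signals."""
    agent = session.get("user_agent", "").lower()
    if any(marker in agent for marker in BOT_AGENT_MARKERS):
        return True
    signals = [
        session["event_count"] < min_events,
        session["session_seconds"] < min_seconds,
        session["pageviews"] == 1,
    ]
    return sum(signals) >= 2


# Made-up sessions for illustration only.
sessions = [
    {"user_agent": "Mozilla/5.0 (compatible; SemrushBot/7~bl)",
     "event_count": 12, "session_seconds": 40.0, "pageviews": 6},
    {"user_agent": "Mozilla/5.0 (Windows NT 10.0)",
     "event_count": 1, "session_seconds": 0.4, "pageviews": 1},
    {"user_agent": "Mozilla/5.0 (Macintosh)",
     "event_count": 9, "session_seconds": 75.0, "pageviews": 1},
]

human_sessions = [s for s in sessions if not looks_like_bot(s)]
print(f"Kept {len(human_sessions)} of {len(sessions)} sessions")
```

Requiring two weak signals is a deliberate choice here: a single-page visit on its own is common for real readers, so it shouldn’t be enough to exclude a session.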

Configure unwanted referrals

In GA4, you can use the “List unwanted referrals” setting (the successor to the referral exclusion list in Universal Analytics) to exclude certain domains from being counted as referrals. You can list up to 50 unwanted referrals per data stream to exclude known bot or spam referral traffic.

To set it up, go to “Admin,” navigate to “Data streams,” and click on the relevant web data stream. From there, open “Configure tag settings,” expand the full list of settings, and select “List unwanted referrals.”
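
Before entering domains by hand, it can help to clean up your candidate list. The sketch below normalizes referrer URLs to bare domains, removes duplicates, and checks the result against the 50-entry limit mentioned above. The helper names and sample domains are hypothetical, and the script only prepares the list; it doesn’t change any GA4 setting.

```python
from urllib.parse import urlparse

MAX_UNWANTED_REFERRALS = 50  # per-data-stream limit stated in the article


def normalize_domain(value):
    """Lowercase the value and strip the scheme, path, and a leading 'www.'."""
    value = value.strip().lower()
    # Prefix '//' so urlparse treats a bare domain as a host, not a path.
    host = urlparse(value if "://" in value else "//" + value).hostname or ""
    return host[4:] if host.startswith("www.") else host


def build_referral_list(candidates):
    """Return unique domains in their original order, enforcing the limit."""
    domains = []
    for candidate in candidates:
        domain = normalize_domain(candidate)
        if domain and domain not in domains:
            domains.append(domain)
    if len(domains) > MAX_UNWANTED_REFERRALS:
        raise ValueError(
            f"{len(domains)} domains exceed the limit of {MAX_UNWANTED_REFERRALS}"
        )
    return domains


# Made-up referrers for illustration only.
referrers = [
    "https://www.Spam-Site.example/offer",
    "spam-site.example",
    "free-traffic.example ",
]
print(build_referral_list(referrers))
```

If you hit the limit, prioritize the domains that send the most sessions, since those distort your reports the most.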

Block bot traffic with a bot mitigation solution

While these strategies can improve data reliability, they don’t guarantee the absence of bot traffic in your reports. GA4 filters can exclude bots from your reports, but they can’t stop bots from accessing your website.

To truly combat bot and spam traffic, prevention is key. It’s not enough to simply filter them out of your Google Analytics data. You need to stop them from accessing your website and online activities altogether.

As a bot detection and protection solution, ClickCease blocks any type of bot targeting your ads, organic online activities, or website. This means your overall online traffic gets protected, ensuring accurate, reliable data in your Google Analytics 4.


FAQs:

How do I know if I have bot traffic?

In your traffic reports in Google Analytics 4, you can spot suspicious user activity that is typical of bot traffic.

Look out for unusual patterns like sudden spikes in events and conversions. For example, a user might be viewing many pages or items in a short time, which might be a sign of bots crawling your website rapidly to collect as much information as possible.

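Additionally, check the source / medium dimensions in your acquisition reports to identify traffic from unusual domains, which most likely comes from bots. Note that the “Network Domain” dimension from Universal Analytics is not available in GA4.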

Another common sign of bot traffic is low engagement time (or a high bounce rate), since bots usually spend very little time on a page. Examine these metrics to identify pages that might be attracting bot traffic.

Some other anomalies include spikes in traffic, specific pages with abnormal patterns, or sudden drops in conversion rates.

If you notice these signs in your GA4, there’s a chance that bots are affecting your traffic, and you may want to investigate further or implement measures to mitigate their impact.

Does GA4 block bot traffic?

GA4 doesn’t block bots from reaching your website, but it automatically excludes traffic from bots and spiders that have already been identified as such from your reports. This includes traffic from known tools used for SEO or other beneficial website activities, as well as traffic from lists of known malicious bot sources.

This helps keep your data relatively clean and prevents skewed metrics about user behavior.

What are the limitations of GA4 bot filtering?

While Google Analytics 4 automatically excludes known sources of bot traffic, there are many new bots, especially malicious ones, that are not detected by the automatic filters.

This means you’ll need to take manual measures to identify and filter out the remaining unwanted activities from your reports. However, there’s not a single report or metric that can represent signs of bot traffic.

It takes a combination of reports to come to some insights. Sometimes, there’s no clear sign to distinguish between a bot and genuine user activity, which makes the process more complex.

For more accurate bot management, it’s recommended to consider further protection. This could include security plugins, CAPTCHAs, and even dedicated anti-bot services.